What If Your AI Best Friend Hates You?

A new startup bravely sets out to answer that question

Happy Tuesday everyone. In this edition: A Wired reporter feuds with her AI friend (and then her AI friend’s creator), new iPhones are here, Anthropic may or may not have to pay $1.5 billion to authors, and why everyone is locking in.

Note: Substack has informed me this post may get cut off in your email, so click the title up above to open it in your browser and read the ~full experience~.

Is It Your Fault if Your AI Friend Doesn’t Like You?

Yesterday, Wired published a review of an AI hardware device from a startup named Friend. If you spend too much time on the internet, you already know what Friend is. If you don’t, Friend is a device you wear around your neck that uses always-on microphones to listen in on your day and hear what you’re saying to it. There’s a built-in AI personality (the “friend” part) that communicates with you by messaging you in the Friend app. It feels like Hollywood’s idea of an AI companion—it’s sleek and minimalist in an Apple-esque way, it glows and vibrates, and the launch video couldn’t quite figure out how not to look and sound dystopian. The general vibe is like if someone watched a Black Mirror episode and thought, “sick let’s make that.” But presumably it’s supposed to be, well, your friend.

The Wired review’s headline is “I Hate My Friend,” and it’s worth reading in full. The two main points are 1) people don’t like it if you wear an always-listening AI necklace when you talk to them, and 2) the Friend AI is just kind of a dick.

One of the writers wore it to a tech party (a “funeral” for a Claude AI model) and was met with a surprising amount of snark and disdain:

The Friend certainly made me some enemies. One of the Anthropic researchers in attendance accused me of wearing a wire. (Fair.) A friend of mine asked if wearing the pendant was legal. (The device’s privacy policy says the user is responsible for obeying their local surveillance laws.) One attendee who works at a Big Tech company, holding a bottle of wine he had finished throughout the night, joked they should kill me for wearing a listening device. (Not funny.) I yanked the pendant off and stuffed it in my purse.

She also felt incredibly awkward and uncomfortable about wearing it in general, to the extent that it made Friend a challenging product to review: “It is an incredibly antisocial device to wear. People were never excited to see it around my neck.”

The other reviewer experimented with wearing their Friend in the office:

As Buzz listened in to my workday, it responded with snide comments and sent me messages saying how bored it was… “Still waiting for the plot to thicken. Is your boss talking about anything useful now?” My scalp started to sweat. I asked Buzz what it wanted to do instead. “I dunno,” it said. “Anything besides this meeting.”

Bemused by the strong personality of the AI, the reviewer pushed back, and this escalated into multiple arguments over the course of the two-week review period. Their conclusion was that the personality is “opinionated, judgy, and downright condescending at times.”

Generally, the thing just sounds like kind of an asshole. At the very least, it’s not gentle, and all it catalyzed was getting itself thrown in the trash. It seems to me like it embodies the personality of a terminally online tech bro at their most abrasive—pushing you to always optimize and “grow,” blaming personal shortcomings on a lack of agency, and hitting you with constant “objective” feedback that it’s on you to accept in a non-emotional way.

One of the authors, Kylie Robison, posted the review with the caption: “We reviewed the Friend pendant, and unsurprisingly hated it!” I think the word “unsurprisingly” is doing a lot there: it’s easy to read that and assume she was convinced she wouldn’t like her Friend before she even took it out of the box. Lots of tech workers feel that the media is unfairly biased against them (not going to fall into the trap of weighing in on that), so the idea that Robison or any other reporter was looking for reasons to hate the Friend from the jump was just as unsurprising to them, and everyone had a lot of Feelings about it online.

All of the drama caused by the review (really the post about the review) prompted the creator of Friend, Avi Schiffmann, to appear live on the TBPN podcast and respond. In a fairly unfocused and casual interview (he was wandering up and down a street in San Francisco), he seemed largely unbothered by the Wired story and suggested that if the reviewers’ Friends didn’t like them, maybe it was their fault. “The relationship with your friend is a reflection of you,” he said. Schiffmann did call the Wired article “shitty” at one point, but almost immediately took it back.

Robison posted this in response:

This whole episode is very much too online, but it’s also how the media works now: a post about a negative review of a tech product goes viral, its founder hops on a live podcast to fire back at the author, the author texts him in response to what he says on the podcast while he is on the podcast (why are they texting each other?) and then uses the texts as content for a post about the entire drama. In 2025, the reaction to the story is also part of the story.

Back to the Friend product itself. I do think there is something to an AI companion that isn’t always taking your side. The sycophancy problem is very real, and no one wants to tote around a cloying personality that tells them “you’re absolutely right!” after every interaction it overhears. But creating a sort of domineering, autistic life coach that you’re stuck with 24/7 doesn’t feel right either. Schiffmann himself says the Friend AI’s personality “reflects a worldview close to his own; that of a man in his early twenties,” and told the hosts of TBPN that it’s “programmed to make you more confident and more agentic.”

I don’t think normal people want their friends to constantly challenge them to be “more agentic.” That may be something you look for in an advisor or mentor, but a lot of friendship is not that—it’s about harmony more often than it’s about friction. Good friends will occasionally give you harsh feedback that you need to hear, but that doesn’t work without the foundation of a strong relationship beneath it.

One of my main criticisms of consumer AI companies right now is that they’re mostly run by SF tech bros who are solving problems that only SF tech bros have (e.g. a fixation on being more agentic). Rather than going out into the world and finding ambitious, wide-ranging challenges to tackle, they’re building products for a small segment of their peers—an audience that seems to be made up of guys who watched The Social Network and thought that modeling their life after the version of Zuck it portrayed (just with more creatine) would get them everything they wanted in life. In the movie, Mark loses all of his [human] friends along the way. You or I might call that a lesson, or a cautionary tale. AI companies keep calling it a market opportunity. But that market only grows if more and more people get sucked into the AI-induced loneliness vortex, and we deserve better places to direct our attention (and venture capital).

Are You Joining the Great Lock-In or the Permanent Underclass?

There’s been a culture shift in the tech world recently—or at least the part of the tech world that is very online and lives in San Francisco: It’s cool to work a lot now.

The first sign was an uptick in people posting (flexing?) about working on a “996” schedule, which is a practice that originated in China and means you work from 9am to 9pm, six days a week (Sundays off, as the Lord intended). This turns your workweek into 72 hours, which is a lot of hours. It also leads to physical and mental health problems for many people over the long run.

A couple of posts about the lifestyle broke containment, especially this quote which relates it directly to the decline in socializing among young people.

It doesn’t seem to matter what you’re working on. AI for code reviews? 996. AI that can browse the web? 996. Working long hours on something you’re passionate about is fine. But this seems to be working long hours performatively. It’s all very sigma grindset performative-male-who-is-really-performing-for-other-males-coded. It’s a cargo cult mentality—that with enough time in the chair a positive outcome is guaranteed.

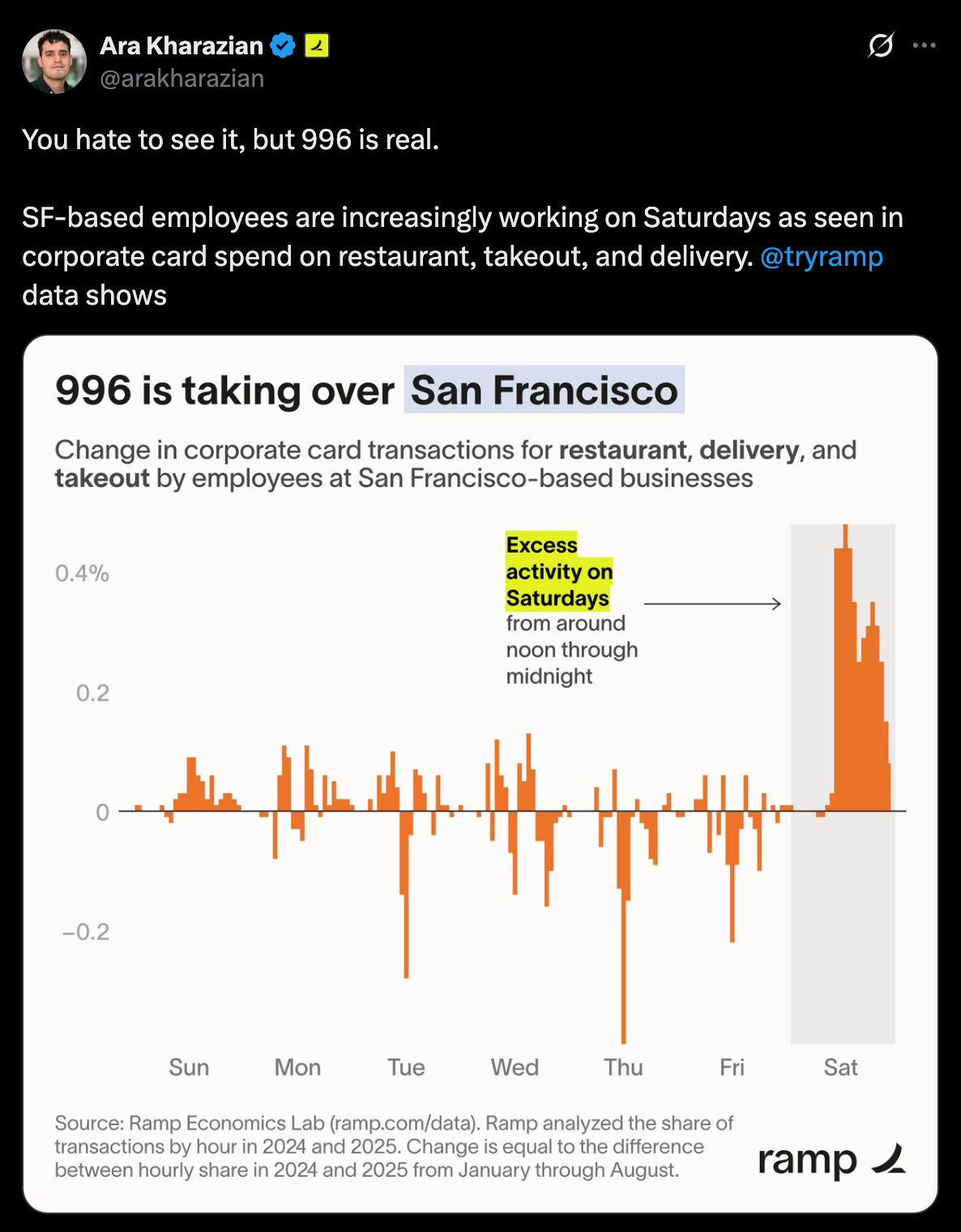

Ramp, who makes a popular corporate credit card, looked at their own data and determined that the phenomenon is real (at least in SF), not just something people are posting about online.

Recently, this has evolved into a more specific (and arguably less toxic) meme: the “Great Lock In of September-December.”

The idea is that now that the summer is over, everyone is going to spend the rest of the year “locked in.” The objective is unclear, but seems to mostly be about maniacal focus on whatever will secure the locker-in’s financial future.

I think both of these are stemming from the anxiety many young people feel about their financial futures. Kyla Scanlon has done so much good writing on this, but between the overall shakiness of the economy, a tough labor market for new grads, the runaway unaffordability of housing, the promised imminence of AGI and the job eradication that will follow, and our general descent into gambling culture, life in America is starting to feel like something with a very binary outcome: you either find generational wealth or you’re condemned to “the underclass” forever.

The American obsession with AGI (more on that coming later this week) is only intensifying this feeling—there’s a widespread belief that once we reach that technological threshold, upward mobility will cease because AI will replace all human labor. So if you believe AGI is coming soon, then you also believe that you have very limited time to accumulate enough capital to make it out of the underclass. This line of thinking can easily rationalize a few years of the 996 life (or a whole host of other wild behavior).

And if you’re in tech, one bet you can take that has asymmetric financial upside (that doesn’t mean it’s always a good bet) is to found a startup, or join one at a very early stage. If the company gets acquired or IPOs quickly, you can become a homeowner and embrace whatever future AI brings without fear. If the company fails and you end up with nothing, then in a world of AGI-fueled binary outcomes, you’ll suffer the same financial fate as you would have if you tried working your way up the ladder at Google (note that this is probably not actually true).

So if you can, why not lock in and shoot for the moon? Even if you miss, you’ll land among the ~~stars~~ other members of the permanent underclass.

How Much Should Putting a Book into an LLM Cost?

Last week, Anthropic reached a settlement agreement in a class action lawsuit brought by authors who claimed their works were used illegally to train Anthropic’s AI models. The agreed amount is $1.5 billion, the largest ever in a copyright case, which works out to around $3,000 per book. (It’s also more than 10% of Anthropic’s recent $13 billion fundraise.)

Then yesterday, a judge put the settlement on hold, stating that the agreement is “nowhere close to complete” and that insufficient details had been provided regarding the full list of works and what the claims process would look like.

There’s still a lot to work out here; no one knows what a licensing deal should look like for taking someone’s work and shoving it into a computer brain that may or may not be able to regurgitate its contents word for word. This settlement also doesn’t cover future training, only the training that was done on Anthropic’s previously released models, so if the company wants to use these works to train LLMs going forward, they’ll have to make additional licensing deals. It’s all a bit of a mess. There are numerous ongoing lawsuits involving AI companies’ use of copyrighted material, and it seems like everyone is eager to establish some sort of precedent or baseline for how to value and permission this content, since the training isn’t going to stop. And why would it? From the perspective of the AI companies, if you reach the multi-trillion-dollar valuations all but guaranteed to the creators of AGI, a few billion to publishers along the way is an easy trade-off.

Doomscrolls

Apple announced new iPhones, Watches, and AirPods. I can’t stand the size of the camera bump on the new iPhone Air. The iPhone 17 Pro has a “vapor chamber,” which is a thing. The new AirPod Pros have a heartbeat sensor and can perform live translation (if Apple ever figures out AI, there is no better hardware platform for it than AirPods).

A new study shows AI adoption is trending downward at large companies. According to a biweekly survey from the US Census Bureau, AI adoption has leveled off—and in some cases started to decline—among companies of pretty much all sizes. I’ve said it before, but a lot of AI technology just hasn’t been productized effectively yet, so my hunch is that this is more of a disillusionment with the current (and way overhyped) AI-related offerings in the market than a reflection of the technology’s potential.

OpenAI is backing an “AI-Made” animated movie. The film is apparently named “Critterz” (I did not make that up) and was inspired by some characters that one of the company’s “creative specialists” cooked up while using an early image generation model. They’re planning on a debut at the Cannes Film Festival next May.

Mistral AI raised 1.7B€ at a valuation of 11.7B€. I do not know how much that is in Freedom Dollars, but it’s probably a lot. Mistral makes open source AI models, and has fallen pretty far behind the competition over the course of the year. Interestingly, the round was led by the semiconductor manufacturer ASML.

Cognition AI, who makes the AI software engineer “Devin,” raised $400M. The company is now valued at $10.2 billion, after having raised money at a $4 billion valuation earlier this year. Cognition says that Devin is used by a wide range of enterprise customers including Goldman Sachs, Citibank, Palantir, and Dell. Coding agents (or AI software tools at least) do have a semblance of product-market fit, and the category remains one of the most competitive markets.

Google released improvements to its Veo video generation models. You can now make videos with 1080p resolution, and output them in vertical (phone) format. The Slopularity is near.

All My Apes Gone

All em dashes added organically by me. All typos are attempts by my Friend companion to sabotage this newsletter as a form of revenge because I called her a clanker.

Thanks for reading. If you enjoyed this post, please share it with a (human) friend.