Friday Linkslop: Some Reading Recs

A new Context Rot weekly feature

Hi everyone. Context Rot Media considers ourselves¹ to be deeply committed to the spirit of innovation, and it is with this in mind that we’re introducing a new weekly feature.

Today is the inaugural edition of the Friday Rot Roundup: a version of the letter that is basically just a list of links to articles or websites that I think are worth your time. At the very least, they were worth mine, and if all else fails, just reply “@grok is this true” in the comments and you’ll get a summary riddled with emoji bullet points.²

I’ll be experimenting with the format quite a bit during this early period, so let me know what you love, what you hate, and what makes you cringe right out of this email 📲.

Quick News Dump

Anthropic is raising $10 billion. Meta hired a former Apple AI exec (what hiring freeze?) and is paying Google $10 billion for cloud infrastructure. Elon asked Zuck to help him buy OpenAI earlier this year. He also thinks his AI goonbots will somehow increase the birth rate. Snap struggles, signals it may spin out Spectacles. Airbnb cofounder Joe Gebbia is the new United States Chief Design Officer, but will he stop the government accounts from posting deportation AI slop? Someone in California has the plague. Saturn’s rings are falling off (we used to be a real solar system).

The Rot Roundup: Weekend Reads

OpenAI is quietly amassing a team of Democratic political operatives to grow its influence within California. There’s a lot beneath the surface here—OpenAI is still figuring out how to cleanly separate the non-profit and for-profit sides of its business, and they’re in a vulnerable position if rivals like Elon continue to wield lots of political influence in a country dominated by the Republican Party. Context Rot’s politics bureau tells me Sam Altman is contributing to a number of Super PACs on his own, including American Bridge and We Deserve Better.

The Times has a great series of retrospectives on influential video games that were released 25 years ago, including The Sims and Counter-Strike. Throw on this playlist and read about Tony Hawk’s Pro Skater 2, or my favorite from the list, Majora’s Mask (incredible kicker in that last one).

If you’ve been on Twitter/X at all these last few weeks, you’ve seen the “donald boat” account repeatedly going viral for (successfully) lobbying several well-known accounts to buy him the various parts he needed for his gaming PC. You can read the complete story on his Substack, as told through a chronological series of tweets. Social networks are special places.

Kyle Chayka wrote about IRL Brain Rot, his word for real-world cultural aesthetics that don’t make any sense when removed from the context of the internet trends that spawned them. It’s interesting to think about the recent labubumatchadubaichocolate-ification of everything as being partly a deliberately garish and absurdist reaction to the hyper-smooth and optimized lifestyles being sold to us by tech companies, d2c brands, and immortal unc. (Can you believe that was a real sentence I just typed?)

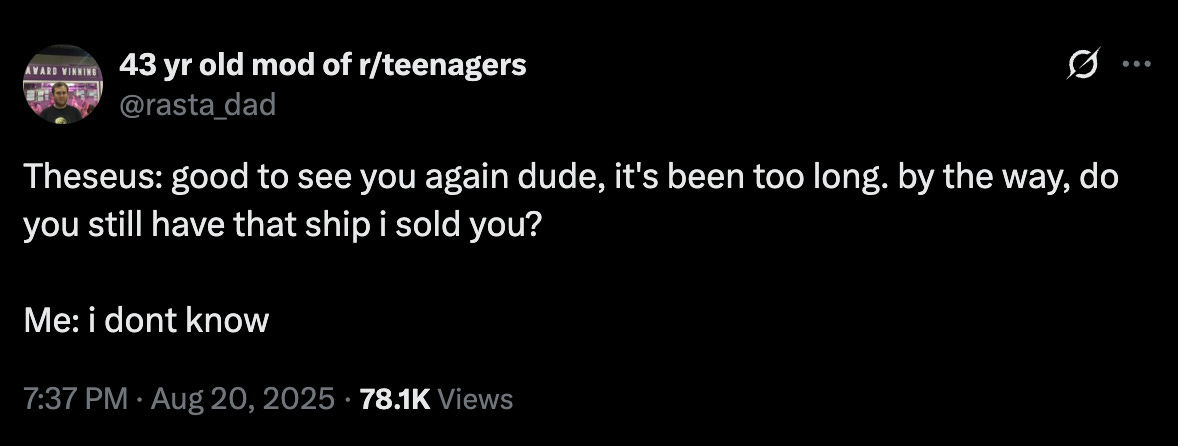

Wired asked teenagers what they really think about AI. Obviously there aren’t any broad conclusions to be drawn here, but it’s a fun window into what school is like in the age of LLMs. I like Leo’s mindset the most: “I always say please and thank you when I use ChatGPT, just in case. If they take over the world, and they’re destroying everyone, then maybe they’ll be like, this guy says please and thank you.”

Mustafa Suleyman wrote a terrific essay on “Seemingly Conscious AI,” and the risks we’ll face if we develop “AI that can not only imitate conversation, but also convince you it is itself a new kind of ‘person’, a conscious AI.” I agree with the dangers he presents here—humans are so prone to anthropomorphizing things that we likely won’t be able to stop ourselves from treating any sufficiently advanced AI as a conscious being. The creators of the technology have the best shot at protecting us from ourselves.

On the flip side, the tepid (to put it mildly) response to the launch of GPT-5 has people starting to wonder what it means if AI progress hits a plateau. There’s a paper from April that feels especially prescient titled “AI as Normal Technology,” and even if you disagree with the conclusions, I think there are salient points regarding the limits of diffusion—the speed at which humans are able to actually adopt and integrate new technological breakthroughs. This is why, as I mentioned yesterday, things feel especially bubble-y right now: a lot of products are being valued as if the efficiency gains have been fully integrated, while the reality is that we’re only just beginning to figure out how to productize most of the technology. These things take time.

Business Insider talked to people in tech about how they’re preparing for AGI. Come for the pivots to looking hot and lifting weights, stay for Aella’s quest to answer the age-old question “What’s a weirder, more intense, crazier orgy we can do?” A lot of this reminds me of the stories of Peak Oil preppers. If you only believe in extreme outcomes, you can justify almost any amount of extreme behavior.

A couple of threads to close it out: the alarming decline in conscientiousness, especially among young people, and Joe Weisenthal on why grifting is so open and shameless these days. Surely these aren’t related, right?

A Parting Thought

All em dashes added organically by me. All typos are probably because I’ve started taking 4,000g of creatine per day so that I can survive the AI robot apocalypse.

Thanks for reading. If you enjoyed this post, please share it with a friend.

¹ The employee count is technically 0.5, but I’m deploying the royal we for credibility-building purposes.

² You will in fact not get a summary. This was not true. <3, grok.