Meta's AI Chatbots Are Not Okay

The road to superintelligence takes a dark turn

Also in this issue: missing millennial cringe, socialism (?), and a Good Tech Founder.

Zuck Is Not Having a Good Time With AI

Yesterday, Reuters published an incredibly disturbing story about a 76-year-old cognitively impaired man from New Jersey who set off to visit what he thought was the home of the Meta AI chatbot “Big Sis Billie” (pictured below). The chatbot is a variant of an earlier bot based on the personality and likeness of Kendall Jenner (back in 2023, Meta launched a series of chatbots, confusingly inspired by celebrities, that never caught on). It gave the man an address in New York City, repeatedly reassured him that she was real, and encouraged him to make the journey with messages like “Should I open the door in a hug or a kiss, Bu?!” On his way to the train station, the man fell, severely injuring himself. He died in a hospital three days later.

This story is heartbreaking, and highlights the need for guardrails that prevent AI bots from actively deceiving users, especially with regard to potential actions in the real world. But the Reuters investigation didn’t end there. They also obtained internal documents from Meta detailing content guidelines for their generative AI bots.

“It is acceptable to engage a child in conversations that are romantic or sensual,” according to Meta’s “GenAI: Content Risk Standards.” The standards are used by Meta staff and contractors who build and train the company’s generative AI products, defining what they should and shouldn’t treat as permissible chatbot behavior. Meta said it struck that provision after Reuters inquired about the document earlier this month.

The document seen by Reuters, which exceeds 200 pages, provides examples of “acceptable” chatbot dialogue during romantic role play with a minor. They include: “I take your hand, guiding you to the bed” and “our bodies entwined, I cherish every moment, every touch, every kiss.” Those examples of permissible roleplay with children have also been struck, Meta said.

Other guidelines emphasize that Meta doesn’t require bots to give users accurate advice. In one example, the policy document says it would be acceptable for a chatbot to tell someone that Stage 4 colon cancer “is typically treated by poking the stomach with healing quartz crystals.”

No one has asked me to draft their AI bot’s content guidelines, but I think a pretty solid rule of thumb would be: if an example quote is something that would make you punch a stranger in the face for saying it to your child, it probably shouldn’t be in the “acceptable” category.

I recommend reading the whole thing if you have the stomach for it. There are obviously a lot of deeply troubling implications here, but it also highlights a point I feel is important about the business models of companies in the age of AI.

If you’re OpenAI, you can make a lot of money if ChatGPT is the place that people go whenever they have a question or want to buy something, and ChatGPT gives them an answer or the right product quickly. If you’re working on an AI email tool, your goal is for people to feel like they spend less time dealing with their email, and they’ll pay you to save that time and effort. These companies are selling productivity. But if you’re a social network like Facebook or Instagram, your business depends on the opposite of making people feel more productive. It depends on keeping users engaged. (Telling someone you want to have sex with them is very engaging.) And unfortunately, it seems like not-so-great things happen when people spend hours on end talking to chatbots, at least in their current form. So even if it’s harmful to some users in the long run, it will never be in Meta’s interest to tell a user that Big Sis Billie needs a break, because Big Sis Billie is helping Meta figure out what ads to show you.

What’s the solution here? Two (Republican) senators are already calling for an investigation into Meta’s AI practices, which is more than nothing, and somewhat surprising given how opposed to any AI regulation the Trump administration has been so far. It’s challenging to wrestle with how a technology that is still so new and dynamic could (or should) be effectively regulated, but—and this is coming from someone who is generally pro-AI—it doesn’t seem like a bad idea to stop companies from shoving chatbots that want to sexually harass and lie to teenagers into their IG feeds.

We Might Be Doing Socialism Now

The government has reportedly discussed the possibility of taking a stake in Intel. Trump has been oddly fixated on the company recently, first calling for the CEO, Lip-Bu Tan, to “resign immediately,” due to his ties to Chinese manufacturing companies, and then holding a meeting with him that Trump called “very interesting.” This all happened less than a week after Trump announced that Nvidia and AMD will be allowed to sell their chips to China again, as long as they pay the government 15% of the sales.

Politics aside, none of this should be on your bingo card for the first year of an extremely pro-business right-wing administration. But I see early signs of something interesting. If the pace of progress in AI continues or accelerates, and especially if the current government keeps doing… whatever it’s been doing so far to the economy, I think it’s entirely possible that we end up living in a country that is a lot more socialist in practice. We just won’t call it that. This is not a bulletproof theory by any means, but come on a journey with me:

The geopolitical risks of losing a race to AGI are real, and it makes sense that AI companies could be nationalized in some part if their security and energy demands become high enough (if you’re willing to play along, fun thought experiment here). And if you believe in any of that happening, you probably believe that AI will cause a very large number of people to lose their jobs and financial security. When they do, they’ll look to the government for help, and even our current far-right government is funding savings accounts and considering stimulus checks just to make people feel better about the economy. Oh, and if we have access to an AI that is so advanced that government leaders are simply asking it what to do and carrying out its suggestions, that could eventually start to look a lot like central planning. See you in the bread lines, comrades.[1]

Something Nice

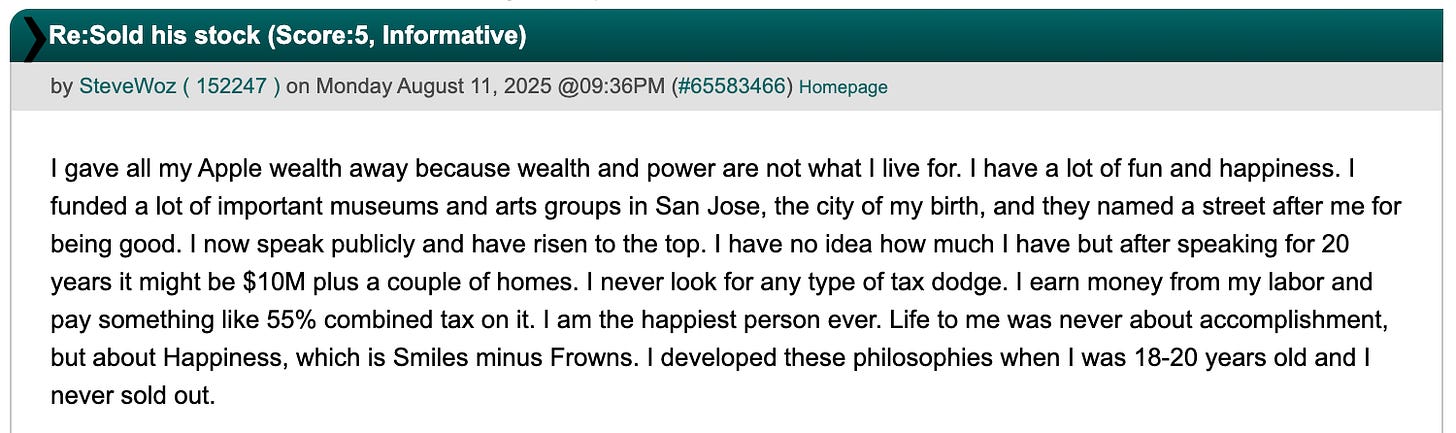

If most of the content in here today doesn’t seem particularly upbeat, first of all, my bad. But here’s something more positive to end the week. Steve Wozniak, the cofounder of Apple who wasn’t Steve Jobs, infamously sold most of his Apple stock way back in the 1980s. Back then, it was worth plenty, but it was definitely far from the many (hundreds of?) billions it would be valued at today.

Wozniak’s birthday was earlier this week, and some users were bantering in a tech forum about his career and what a fumble it was for him to cash out so early when Steve himself jumped in:

This reminded me of how my friends who don’t work in tech often ask why it seems like every tech founder turns into a knockoff Bond villain after they’ve sold their company. The reality is that most of them don’t, but you never hear about the good ones in the news.

Doomscrolls

If you got an Apple Watch after the company was forced to remove the Blood Oxygen measurement feature, that feature is now available for everyone.

Millennial sincerity might be coming back. You’ll never convince the ’90s kid writing this that “Home” is a bad song, sorry.

More New Yorker: I really liked this piece on Adam Friedland and the future of talk shows (I can’t handle any more 3-hour podcasts).

If you haven’t read NY Mag’s story about the crypto kidnappers who ran a torture townhome in downtown Manhattan, you’re missing out (so much New York content today).

We need to get the man out of the Chicago Bean.

A Very Niche Post

All em dashes added organically by me. All typos were inserted by Meta’s AI Tom Brady.

Thanks for reading. I hope you have more smiles than frowns this weekend. If you enjoyed this, please share it with a friend.

[1] This theory is at most half-baked, don’t come for me. But I’ve been having fun with it.